Building an Autonomous Agent to Play Text Adventures (LLM + MCP) 🕹️🤖

- Building an Autonomous Agent to Play Text Adventures (LLM + MCP) 🕹️🤖

- What I built 🧰

- The assignment goal 🎯

- Architecture: a clean separation of “tools” and “brain” 🏗️

- Why MCP is a great fit for this 🔧

- Implementation: the MCP server (tool layer) 🛠️

- Implementation: the ReAct agent (observe → reflect → act) 🤖🧠

- Example gameplay (from a real run) 🎮

- Results so far 📊

- The platform: Hugging Face as the LLM provider ☁️

- Extra things I built (that made this fun) ✨

- Things I learned 📝

- More to explore 🚀

- References 🔗

As a kid, I spent hours playing games like Sam & Max Hit the Road and Monkey Island. They aren’t strictly text adventures, but the core loop is the same: explore, read clues, try actions, get stuck, backtrack, and slowly progress in this parallel universe.

This project was my attempt to automate that loop: an autonomous agent that plays classic text adventure games end-to-end—no human typing—just a language model choosing actions through a tool interface.

This project was part of the 2026 LLM Course taught by Nathanaël Fijalkow and Marc Lelarge. Thank you for this fun project!


What I built 🧰

- An MCP server that exposes a text adventure game as a small set of tools: “play an action”, “check memory”, “see the map”, and “check inventory”.

- A ReAct-style agent that loops: 1) observe the latest game output, 2) think about what to do next, 3) call a tool, 4) repeat.

- Logging + visualization so I can debug agent behavior without reading endless terminal outputs.

The assignment goal 🎯

Use an MCP server to interact with the game, and use a language model to understand the game state and make decisions, so the agent can explore and solve puzzles autonomously.

A text adventure is a perfect “agent playground”:

- It’s stateful (your actions change the world).

- It’s language-driven (observations and actions are text).

- It requires long-horizon reasoning (keys, doors, light sources, multi-step puzzles).

- It punishes shallow patterns (you can loop forever doing “look”).

Architecture: a clean separation of “tools” and “brain” 🏗️

The system is split into two major pieces:

- MCP server = capabilities

  - Wraps the game environment (Jericho/Z-machine)

  - Exposes tools like play_action, memory, get_map, inventory

- Agent client = decision-making

  - Calls the LLM

  - Chooses which tool to call next

  - Keeps lightweight state (recent actions, stagnation detection, etc.)

In this repo, the runner launches them together and connects over stdio:

- Runner: run_agent.py

- Server: mcp_server.py

- Agent: agent.py

At runtime it looks like:

run_agent.py

-> launches mcp_server.py as a subprocess (GAME=zork1)

-> connects via FastMCP client (stdio transport)

-> agent calls tools in a loop

Why MCP is a great fit for this 🔧

MCP is a strong match for autonomous agents because it forces good boundaries:

- Stable interface: the agent only needs tool names + JSON args, not emulator internals.

- Debuggable runs: you can inspect what the agent asked the server to do vs what actually happened.

- Swap models easily: the tool layer stays constant even if you change LLMs.

- Encourages structured state: tools like memory and get_map provide anchors that pure text often lacks.

In short: MCP makes it feel like you’re building a system, not just prompt-chaining.

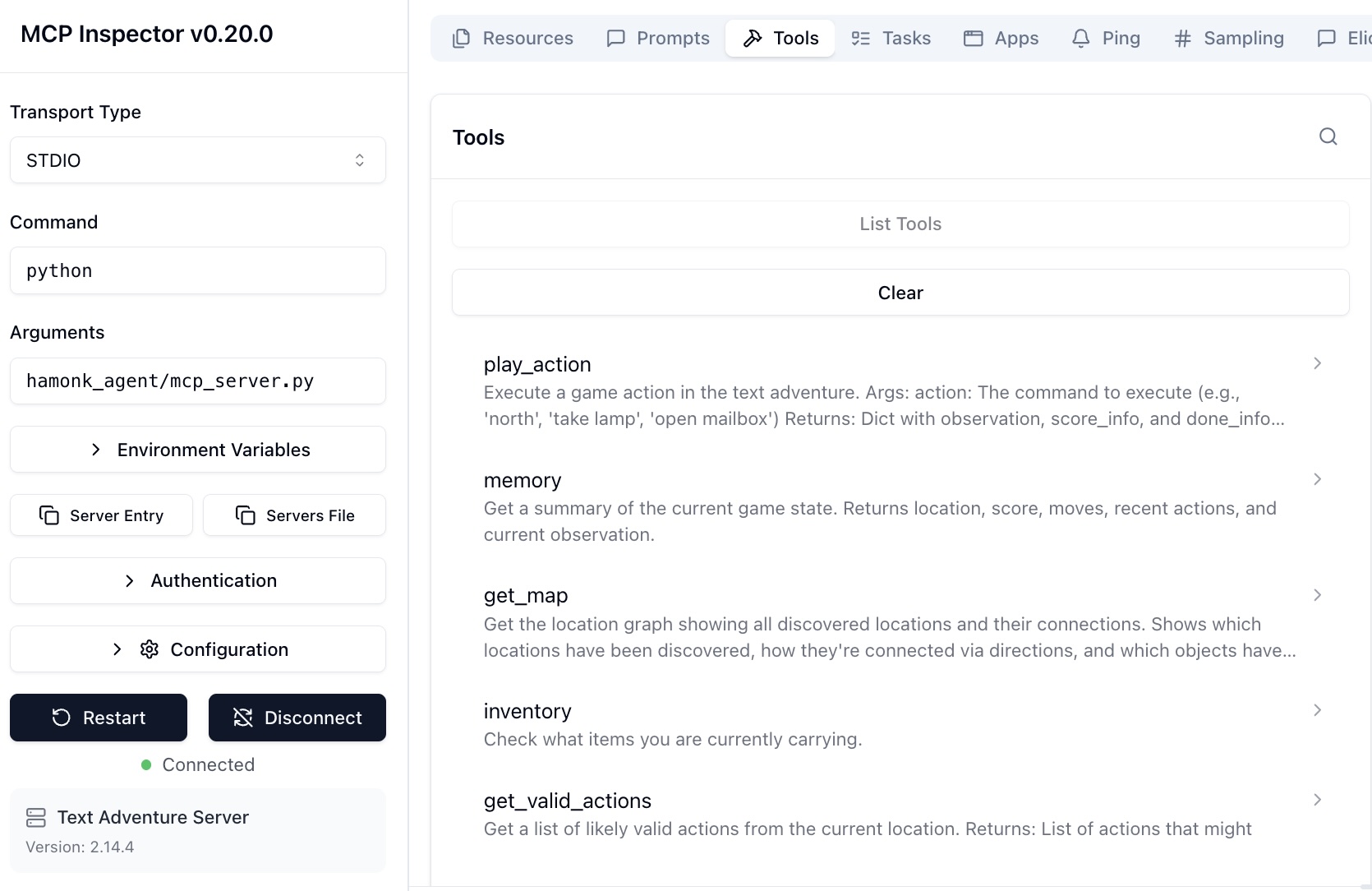

The MCP Inspector is also handy for debugging: it lists the defined tools and lets you call them directly from its UI.

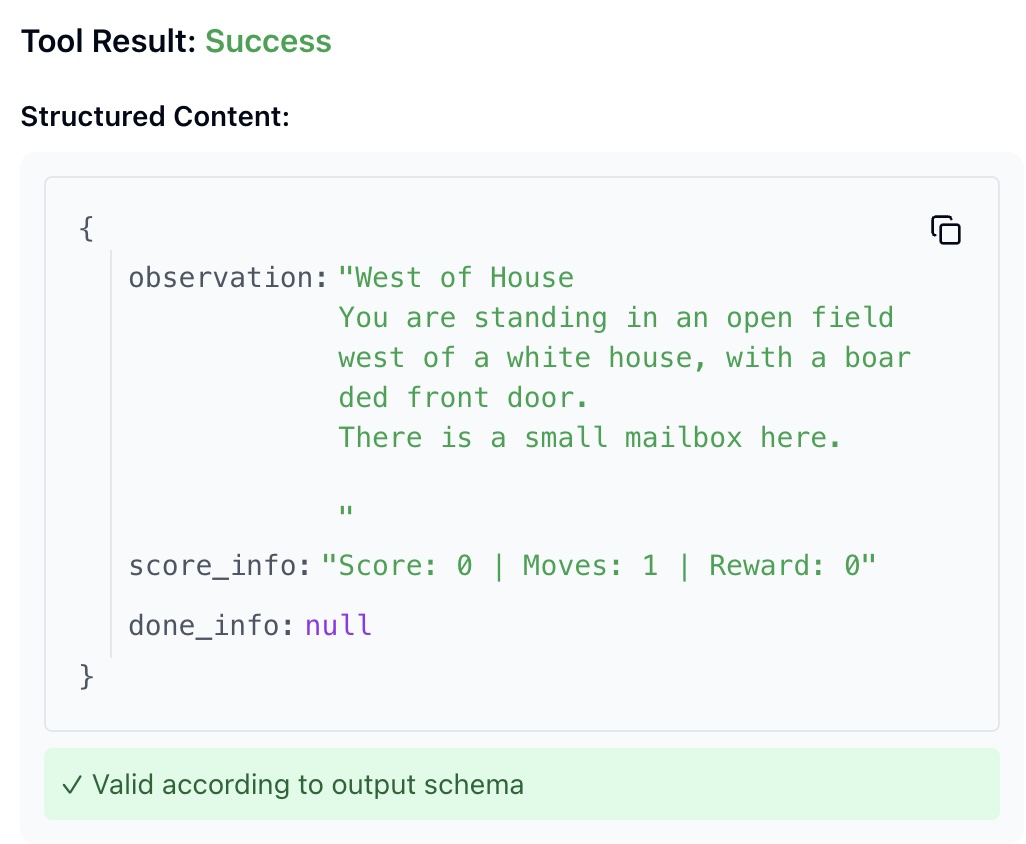

Implementation: the MCP server (tool layer) 🛠️

The MCP server wraps a game environment and adds “agent-friendly” structure.

Tools exposed

A typical minimal toolbox looks like:

- play_action(action: str) -> str: send a game command like “north”, “open mailbox”, or “take lamp”.

- memory() -> str: a summary of the current location, number of discovered locations, recent actions, and current observation.

- get_map() -> str: a graph of discovered locations inferred from movements.

- inventory() -> str: what the player is carrying.
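To make the tool signatures concrete, here is a minimal sketch of the four tools as plain methods. It is an illustration under assumptions, not the project's code: FakeGame is a hypothetical two-room stand-in for the Jericho environment, and the ToolLayer class and its fields are names I made up for this example. In the real server, each method would additionally be registered as an MCP tool.

```python
from dataclasses import dataclass, field


class FakeGame:
    """Hypothetical two-room stand-in for the real game environment."""

    def __init__(self) -> None:
        self.location = "West of House"
        self.items: list[str] = []

    def step(self, action: str) -> str:
        if action == "north" and self.location == "West of House":
            self.location = "North of House"
            return "North of House. You are facing the north side of a white house."
        if action == "take leaflet" and "leaflet" not in self.items:
            self.items.append("leaflet")
            return "Taken."
        return "You can't do that."


@dataclass
class ToolLayer:
    """The four tools as plain methods; MCP registration is left out."""

    game: FakeGame
    recent_actions: list[str] = field(default_factory=list)
    visited: set[str] = field(default_factory=set)
    edges: set[tuple[str, str, str]] = field(default_factory=set)
    last_observation: str = ""

    def play_action(self, action: str) -> str:
        before = self.game.location
        self.last_observation = self.game.step(action)
        self.recent_actions.append(action)
        self.visited.update({before, self.game.location})
        if self.game.location != before:
            # A changed location means the action was a successful move.
            self.edges.add((before, action, self.game.location))
        return self.last_observation

    def memory(self) -> str:
        recent = ", ".join(self.recent_actions[-5:]) or "none"
        return (
            f"Location: {self.game.location}\n"
            f"Discovered locations: {len(self.visited)}\n"
            f"Recent actions: {recent}\n"
            f"Observation: {self.last_observation}"
        )

    def get_map(self) -> str:
        lines = [f"{src} --{move}--> {dst}" for src, move, dst in sorted(self.edges)]
        return "\n".join(lines) or "No connections discovered yet."

    def inventory(self) -> str:
        return ", ".join(self.game.items) or "You are empty-handed."
```

Every tool returns a string, which keeps the interface uniform for the agent: whatever it calls, it gets text back that can go straight into the next prompt.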

Why the server does more than “just return text”

Raw game text is rich but messy. The server can cheaply provide structure that helps the agent reason:

- track unique locations (often with an internal location ID),

- build a location graph from movement actions,

- extract objects in a room (I tried a small LLM for this task; the text in these games can be obscure and confusing on purpose),

- emit event logs for post-run analysis.

That little bit of structure dramatically improves reliability.
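As a rough illustration of the location tracking described above, the sketch below builds a directed graph from movement actions. The assumptions are mine: rooms arrive as (id, name) pairs from the game wrapper, only six directions count as movement, and the LocationGraph class with its method names is hypothetical.

```python
DIRECTIONS = {"north", "south", "east", "west", "up", "down"}


class LocationGraph:
    """Directed graph of rooms keyed by location ID (names are for display)."""

    def __init__(self) -> None:
        self.names: dict[int, str] = {}
        self.exits: dict[int, dict[str, int]] = {}

    def record(self, src: tuple[int, str], action: str, dst: tuple[int, str]) -> None:
        """Register both rooms; add an edge only when a move changed the room."""
        for loc_id, name in (src, dst):
            self.names[loc_id] = name
        if action in DIRECTIONS and src[0] != dst[0]:
            self.exits.setdefault(src[0], {})[action] = dst[0]

    def unmapped(self, loc_id: int) -> list[str]:
        """Directions with no recorded edge from this room (untried or blocked)."""
        return sorted(DIRECTIONS - set(self.exits.get(loc_id, {})))

    def render(self) -> str:
        """Text form of the graph, suitable as a get_map result."""
        lines = []
        for src_id in sorted(self.exits):
            for move, dst_id in sorted(self.exits[src_id].items()):
                lines.append(f"{self.names[src_id]} --{move}--> {self.names[dst_id]}")
        return "\n".join(lines)
```

Keying on an ID rather than the room name matters because text adventures reuse names: several distinct rooms can share one name (a forest, a maze), and a name-keyed graph would merge them.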

Implementation: the ReAct agent (observe → reflect → act) 🤖🧠

The agent uses a strict, parseable format:

THOUGHT: <brief reasoning>

TOOL: <tool_name>

ARGS: <JSON>

This is one of the highest-leverage decisions in the whole project: it keeps tool invocation machine-readable and makes failures easier to diagnose.
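A parser for this format can be quite small. The following is a sketch rather than the project's parser: the ToolCall dataclass and parse_reply function are hypothetical names, and I assume each field fits on one line and that a missing ARGS line means "no arguments".

```python
import json
import re
from dataclasses import dataclass


@dataclass
class ToolCall:
    thought: str
    tool: str
    args: dict


# One "KEY: value" field per line; [ \t]* keeps the match on a single line.
FIELD = re.compile(r"^(THOUGHT|TOOL|ARGS):[ \t]*(.*)$", re.MULTILINE)


def parse_reply(text: str) -> ToolCall:
    """Parse a THOUGHT/TOOL/ARGS reply; raise ValueError if it is malformed."""
    fields = {key: value.strip() for key, value in FIELD.findall(text)}
    if not fields.get("TOOL"):
        raise ValueError("reply has no TOOL line")
    try:
        args = json.loads(fields.get("ARGS") or "{}")
    except json.JSONDecodeError as exc:
        raise ValueError(f"ARGS is not valid JSON: {exc}") from exc
    if not isinstance(args, dict):
        raise ValueError("ARGS must be a JSON object")
    return ToolCall(fields.get("THOUGHT", ""), fields["TOOL"], args)
```

Raising a single exception type for every malformed reply gives the agent loop one place to handle failures, for example by re-prompting the model with the error message.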

The loop

Each step:

- 1) Observe the latest game output (usually from play_action).

- 2) Reflect using the LLM and short-term state (recent actions, discovered locations, etc.).

- 3) Act by calling one MCP tool.

- 4) Update counters + logs.
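The four steps above can be sketched as a single function. This is a simplified illustration, not the actual agent.py: the decide callable stands in for "call the LLM and parse its reply", call_tool stands in for the MCP client, and the opening “look” action is my assumption about how a run starts.

```python
from collections import deque
from typing import Callable

Decision = tuple[str, str, dict]  # (thought, tool name, tool args)


def run_episode(
    decide: Callable[[str, list[str]], Decision],
    call_tool: Callable[[str, dict], str],
    max_steps: int = 50,
) -> list[dict]:
    """Observe -> reflect -> act -> update, for exactly max_steps steps."""
    recent: deque = deque(maxlen=5)  # short-term state passed to the model
    observation = call_tool("play_action", {"action": "look"})  # initial observe
    log: list[dict] = []
    for step in range(1, max_steps + 1):
        thought, tool, args = decide(observation, list(recent))  # reflect
        result = call_tool(tool, args)                           # act
        if tool == "play_action":
            recent.append(args.get("action", ""))
        observation = result  # the next step observes whatever the tool returned
        log.append({"step": step, "thought": thought, "tool": tool,
                    "args": args, "result": result})             # update
    return log
```

Passing decide and call_tool in as plain callables makes the loop testable without a model or a game: a scripted decide and a fake tool function are enough to exercise it.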

Exploration-first strategy

My current agent is explicitly biased toward exploration:

- interact with objects mentioned in the room description,

- try cardinal directions systematically,

- avoid repeating actions like “look” in loops,

- when stuck, consult get_map (throttled so it doesn’t spam).

This doesn’t solve Zork on its own, but it can explore the Zork world and uncover clues!
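Two of these rules, avoiding repeated actions and throttling get_map, are simple enough to sketch in code. The class name, the window size, and the thresholds below are illustrative assumptions, not the values used in the project.

```python
from collections import deque
from typing import Optional


class ExplorationGuard:
    """Flags repeated actions and rate-limits get_map calls."""

    def __init__(self, window: int = 6, max_repeats: int = 2, map_cooldown: int = 5) -> None:
        self.recent: deque = deque(maxlen=window)  # sliding window of recent actions
        self.max_repeats = max_repeats
        self.map_cooldown = map_cooldown
        self.last_map_step: Optional[int] = None

    def record(self, action: str) -> None:
        self.recent.append(action)

    def is_repetitive(self, action: str) -> bool:
        """True if the action already appears max_repeats times in the window."""
        return self.recent.count(action) >= self.max_repeats

    def may_consult_map(self, step: int) -> bool:
        """Allow get_map at most once every map_cooldown steps."""
        if self.last_map_step is not None and step - self.last_map_step < self.map_cooldown:
            return False
        self.last_map_step = step
        return True
```

One way to use the guard is to check is_repetitive before executing the model's chosen action and, when it fires, add a note to the next prompt asking for a different action instead of silently overriding the model.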

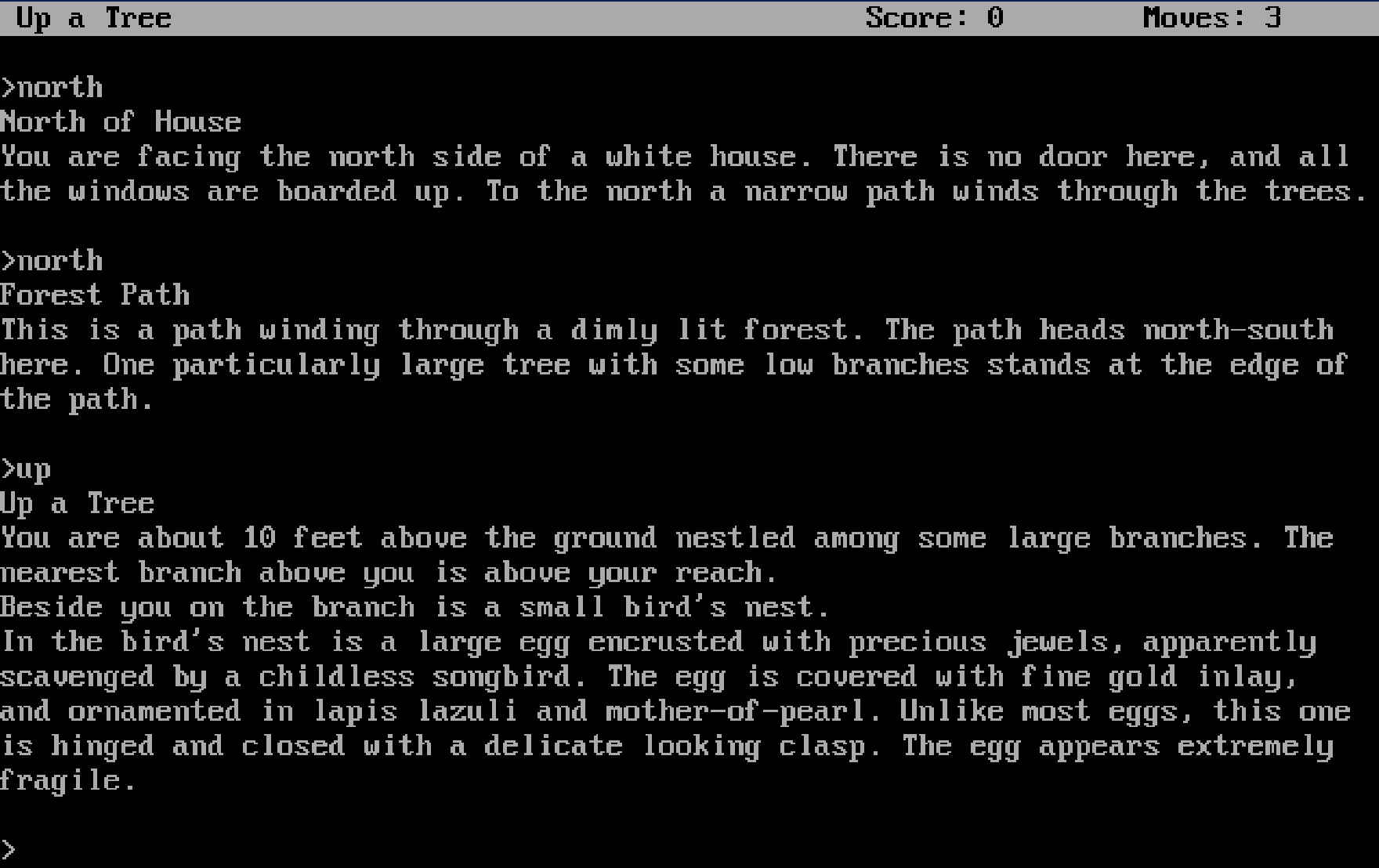

Example gameplay (from a real run) 🎮

This excerpt is adapted from a Zork run. I’m including the key steps rather than the full transcript.

The agent starts outside the white house, sees a mailbox in the description, and immediately interacts with it:

- Step 1

  - Thought: mailbox mentioned → interact

  - Action: open mailbox

  - Result: discovers a leaflet

Then it takes and reads the leaflet (classic Zork onboarding), and continues exploring north into the forest.

A few steps later, it notices a climbable tree and finds something valuable:

- Step 6–7

  - Action: climb tree → discovers a nest

  - Action: take egg → score increases

Eventually, after exploration stalls, it consults the map tool:

- Step ~16

  - Tool: get_map

  - Result: a location graph with discovered rooms + connections

That’s the pattern I was aiming for:

- read the world

- act on specific details

- use tools when stuck

- don’t spin in circles forever

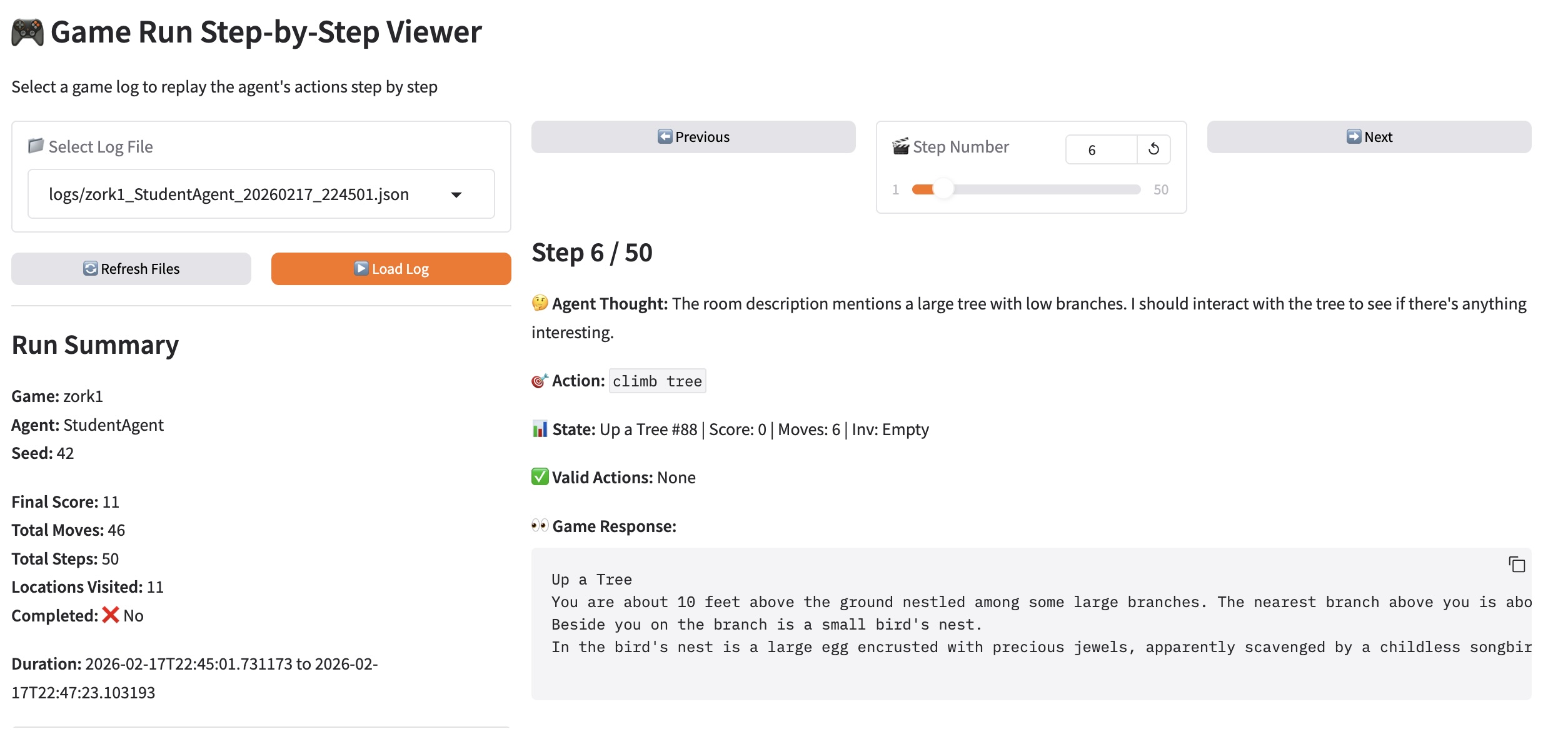

The Gradio UI I built to debug runs:

Results so far 📊

From a recent structured run log (logs/zork1_StudentAgent_20260217_224501.json):

- Game: zork1

- Max steps: 50

- Final score: 11

- Final moves: 46

- Unique locations visited: 11

- Completed: no (not within the step limit)

This is not “solving Zork” yet, but it’s a solid exploration baseline. The harder part is long-horizon puzzle solving: remembering constraints, forming hypotheses, and executing multi-step plans reliably.

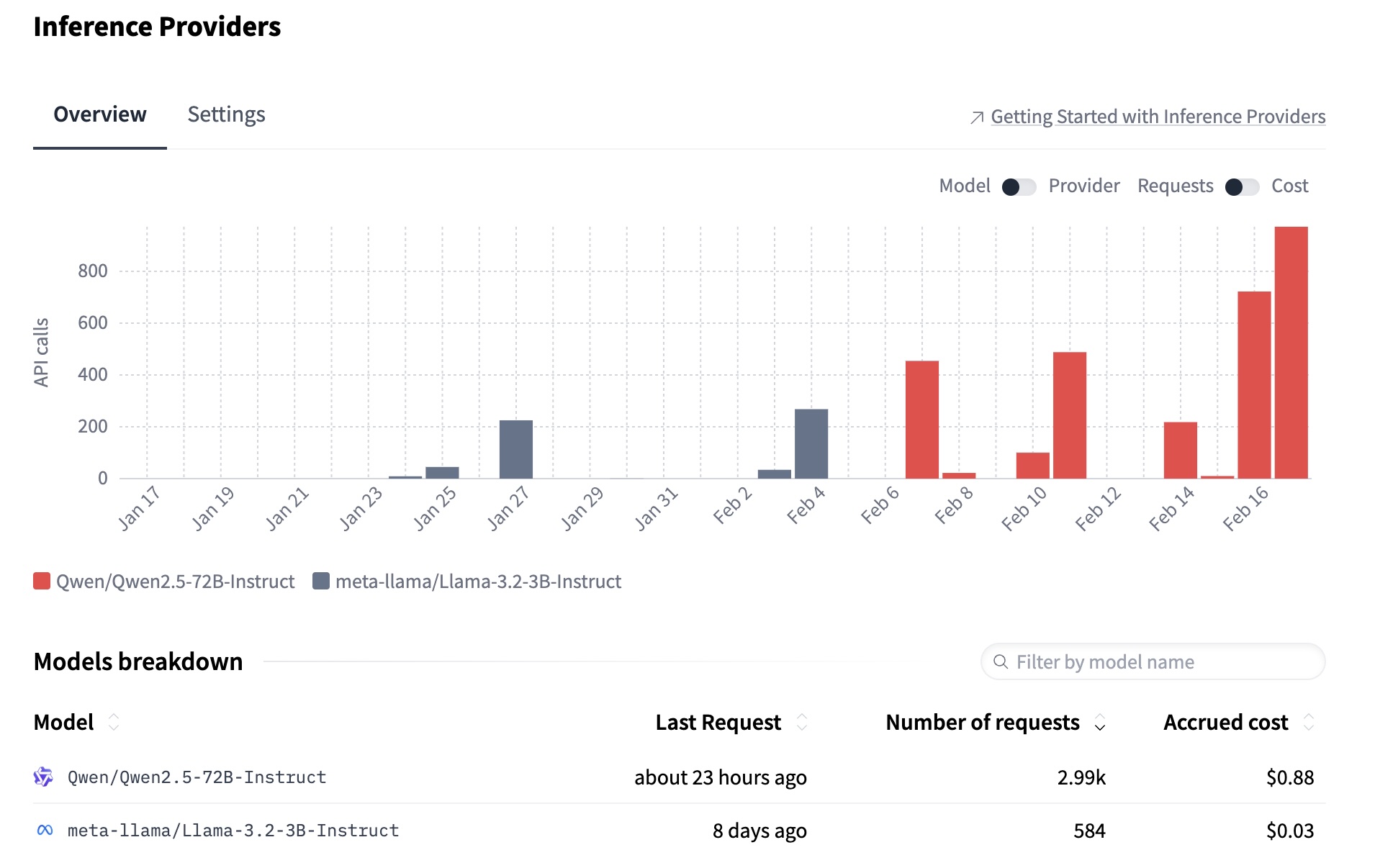

The platform: Hugging Face as the LLM provider ☁️

I used Hugging Face Inference as the LLM backend (via huggingface_hub.InferenceClient).

I paid $10 to cover the month I planned to work on this project.

What I liked:

- easy to get started (token + model name),

- easy to try multiple models with the same interface,

- good fit for experiments and iteration.
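For reference, a single model call through InferenceClient looks roughly like the sketch below. The helper names, the HF_TOKEN environment variable, and the sampling settings are my assumptions for illustration; the client is passed in as a parameter so the function can be exercised with a stub, and huggingface_hub is imported lazily so the sketch loads without it.

```python
import os


def ask_model(client, model: str, system_prompt: str, observation: str) -> str:
    """Send one chat request and return the reply text."""
    response = client.chat_completion(
        messages=[
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": observation},
        ],
        model=model,          # swapping models only changes this string
        max_tokens=200,       # illustrative values, not tuned settings
        temperature=0.2,
    )
    return response.choices[0].message.content


def make_client():
    """Create the real client; needs huggingface_hub installed and HF_TOKEN set."""
    from huggingface_hub import InferenceClient  # lazy import, see lead-in
    return InferenceClient(token=os.environ["HF_TOKEN"])
```

Because the model name is just an argument, comparing models comes down to running the same loop with a different string.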

Extra things I built (that made this fun) ✨

A few “support tools” made development much smoother:

- Run logging: every step records thought/tool/args/result, plus score, moves, location, and inventory.

- Jericho interface exploration: small scripts to understand available methods and game internals.

- Gradio run visualizer: a UI to load logs and replay the run step-by-step, plus charts and a location graph (visualize_runs.py).

This tooling matters because agents fail in weird ways. Without observability, you end up guessing.
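As an illustration of what a structured run log can look like, here is a minimal sketch that writes and reloads per-step records as JSON. The field names follow the list above, but the exact schema, the StepRecord class, and the save_run/load_run helpers are assumptions, not the format of the real log files.

```python
import json
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class StepRecord:
    step: int
    thought: str
    tool: str
    args: dict
    result: str
    score: int
    moves: int
    location: str
    inventory: list[str] = field(default_factory=list)


def save_run(path: Path, game: str, steps: list[StepRecord]) -> None:
    """Write the whole run as one JSON document."""
    payload = {"game": game, "steps": [asdict(s) for s in steps]}
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(payload, indent=2), encoding="utf-8")


def load_run(path: Path) -> list[StepRecord]:
    """Read a run back, for example to replay it step by step."""
    payload = json.loads(path.read_text(encoding="utf-8"))
    return [StepRecord(**raw) for raw in payload["steps"]]
```

Keeping one record per step, with the score and location alongside the thought and tool call, is what makes replay and charts cheap to build later: the visualizer only has to iterate over the list.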

Things I learned 📝

- Hugging Face makes it easy to experiment with multiple LLMs.

- Tool-first architecture (MCP) keeps complexity contained.

- ReAct is a practical scaffolding pattern: not magic, but it makes the system testable.

- Debugging agents is mostly about loop prevention, state representation, and failure recovery.

More to explore 🚀

Next steps I’m excited about:

- Persist the map across runs (so exploration compounds instead of resetting every time).

- Persist “best ideas” (successful sub-plans, hypotheses, puzzle notes).

- Interrupt + resume mode (pause an agent, inspect state, continue without restarting).

- Multi-agent setups:

- one agent explores and maps,

- one agent focuses on puzzles/quests,

- one agent manages inventory and experimentation.

- Other projects that could use this architecture: any stateful environment with a small tool API.

References 🔗

- ZorkGPT (adaptive play, multi-agent ideas): https://github.com/stickystyle/ZorkGPT

- Hinterding’s Zork + AI writeup: https://www.hinterding.com/blog/zork/

Two points I strongly agree with:

- Current limitations: AI can parse local context but struggles with long-term logic + spatial reasoning.

- “Vibe coding” risk: rapidly iterating with AI without refactoring can lead to a fragile, overly complex codebase.